Site content by Julian Oliver Dörr and Sebastian Schmidt

This site introduces the Mannheim Web Panel (MWP) – a panel dataset of website contents extracted from corporate websites for a large sample of German companies.

The MWP was developed at the ZEW – Leibniz Centre for European Economic Research in the Project Business and Economic Research Data Center (BERD@BW).

Why corporate websites?

Company websites pose an important source of economic data used by firms to spread product and service information (related to establishing a public image), to conduct transactions (e-business processes) and to ease opinion sharing (electronic word-of-mouth) (Balzquez & Domenech, 2018). Recent economic studies have used corporate website data to:

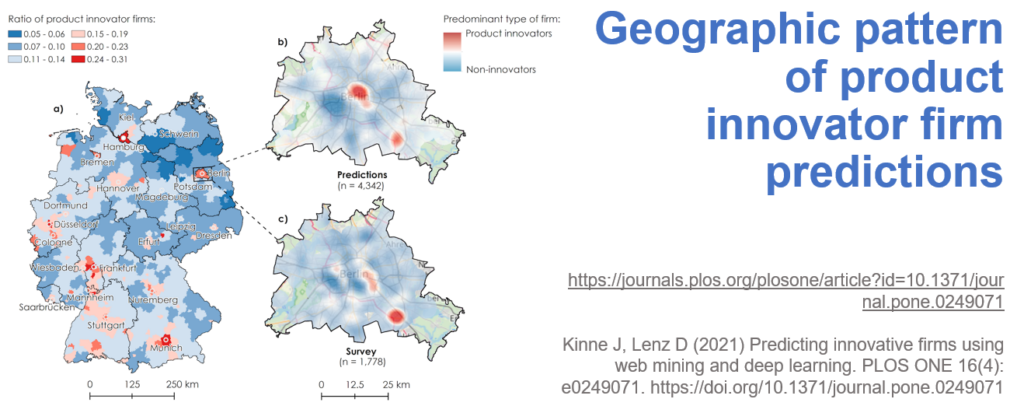

- predict firm innovativeness (Gök et al., 2015, Kinne & Lenz, 2021; Axenbeck & Breithaupt, 2021)

- examine market entry strategies (Arora et al., 2013)

- examine enterprise growth (Li et al., 2016)

- monitor firm export orientation (Balzquez & Domenech, 2017)

- track crisis impacts on the corporate sector (Dörr et al., 2021)

- …

Why panel data?

Firm characteristics, diffusion processes such as technological advances and technological adoption as well as business relations are clearly not static but evolve over time. It requires a continuous monitoring of corporate websites to capture this information. For this reason, the ZEW – Leibniz Centre for European Economic Research scrapes corporate website contents since 2018 and has established a panel format of these contents updated every three to six months.

Is the data available for researchers?

The MWP data is stored in ZEW’s cloud structure (Seafile) and can be accessed by externals for the purpose of research via ZEW’s Research Data Centre (ZEW-FDZ). Upon signing a licence agreement, interested users will have full access to the MWP in a secured environment provided by ZEW. For more details please contact:

How is the data structured?

The MWP includes a large amount of web data from corporate websites of German companies. The general scraping framework used to establish the MWP is available on Github. The scraping parameters for the MWP are standardized. For each website, the first 50 subpages are downloaded with shorter URLs in the corporate web-domain scraped more likely. Webpages which are in German language are preferred in the scraping process such that the majority of text content in the MWP is in German.

In the following, we will describe the data structure and access to the panel in more detail using Python. Note that the data can be accessed by any other programming language as well. The Seafile client that allows you to access the MWP from your local machine corresponds to “Q:\” drive in the following. Set the relevant drive on your machine in the cell below:

drive = "Q:\\"

In total, the MWP includes approximately 740 GB of data (as of April 2021) and information on more than 2.7 million firms. However, only a part of these firms is included in each wave. Thus, the MWP comes with a file showing for each firm whether it had been scraped successfully in the respective wave (in the table below 1 indicates a sucessful scraping attempt of the respective corporate website). In general, web data is available for the following dates:

- December 2018

- April 2019

- August 2019

- December 2019

- March 2020

- May 2020

- August 2020

- October 2020

- January 2021

import pandas as pd overview = pd.read_csv(drive + r"Für mich freigegeben\Mannheimer Webpanel (MWP)\Übersicht.csv", delimiter='\t') overview.head(5)

| ID | url | 2018_12 | 2019_04 | 2019_08 | 2019_12 | 2020_03 | 2020_05 | 2020_08 | 2020_10 | 2021_01 | |

|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 2010000001 | www.wiener-conditorei.de | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| 1 | 2010000057 | www.hoernicke.de | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| 2 | 2010000074 | www.psi.de | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 0 | 0 |

| 3 | 2010000125 | www.scherdel.de | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 0 | 1 |

| 4 | 2010000144 | www.kleinschmidt.de | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 0 | 0 |

5 rows × 13 columns

Each wave is stored within a corresponding directory in Seafile and is split up in multiple chunks (generally about 100-150 MB each). These chunks are csv-files containing the scraped webiste information including:

- ID: ID of the company

- dl_rank: chronological order the webpage was downloaded (main page = 0)

- dl_slot: domain name of the website

- alias: domain of website if there was an initial redirect (e.g. from www.example.de to www.example.com)

- error: indicating if there was an error when requesting the website’s main page (e.g. HTML error, timeout)

- redirect: equals “True” if there was a redirect to another domain when requesting the first webpage from a website

- start_page: first webpage that was scraped

- title: title of the website as indicated in the website’s meta data

- keywords: keywords as indicated in the website’s meta data

- description: short description of the website as indicated in the website’s meta data

- language: language of the website as indicated in the website’s meta data

- text: text that was downloaded from the webpage, including the respective HTML tags

- links: domains of websites (within-sample and out-of-sample) found on the focal website

- timestamp: exact time when the webpage was downloaded

- url: URL of the requested webpage

For some of the older waves, information on title, keywords, description and language are not included.

How can the data be accessed?

The files can be accessed for further analysis e.g. by using pandas. For this, the following modules are necessary.

import pandas as pd import os import glob

How can singular files be accessed?

Singular files can be accessed by path, as in a regular folder structure. Their names always follow the same patterns (ARGUS_chunk_p*).

# Define which wave you want to access wave = "2020_03"

singular_file = pd.read_csv(drive + r"Für mich freigegeben\Mannheimer Webpanel (MWP)\\" + wave + r"\ARGUS_chunk_p12.csv", delimiter='\t') singular_file.head(5)

| ID | dl_rank | dl_slot | error | redirect | … | description | text | timestamp | url | links | |

|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 2011140655 | 0 | juwelo.tv | None | True | … | Edelsteinschmuck in allen Variationen günstig … | Ihr Experte für zertifizierten Edelsteinschmuc… | Tue Mar 17 18:18:25 2020 | https://www.juwelo.de/ | juwelo.fr,juwelo.nl,juwelo.es,juwelo.it,juwelo… |

| 1 | 2011140655 | 1 | juwelo.tv | None | True | … | Liebhaber eleganter Schmucktrends sollten sich… | Ihr Experte für zertifizierten Edelsteinschmuc… | Tue Mar 17 18:18:25 2020 | https://www.juwelo.de/tpc/ | juwelo.fr,juwelo.nl,juwelo.es,juwelo.it,juwelo… |

| 2 | 2011140655 | 2 | juwelo.tv | None | True | … | Juwelo – AGB. Schmuckpräsentation und Herstell… | Ihr Experte für zertifizierten Edelsteinschmuc… | Tue Mar 17 18:18:25 2020 | https://www.juwelo.de/agb/ | juwelo.fr,juwelo.nl,juwelo.es,juwelo.it,juwelo… |

| 3 | 2011140655 | 3 | juwelo.tv | None | True | … | Ringe besetzt mit wunderschönen Edelsteinen, v… | Ihr Experte für zertifizierten Edelsteinschmuc… | Tue Mar 17 18:18:25 2020 | https://www.juwelo.de/ringe/ | juwelo.fr,juwelo.nl,juwelo.es,juwelo.it,juwelo… |

| 4 | 2011140655 | 4 | juwelo.tv | None | True | … | Schmuck mit echten Edelsteinen von Dagen ist n… | Ihr Experte für zertifizierten Edelsteinschmuc… | Tue Mar 17 18:18:25 2020 | https://www.juwelo.de/dagen/ | juwelo.fr,juwelo.nl,juwelo.es,juwelo.it,juwelo… |

How can an entire wave be accessed?

Data from an entire wave can be accessed by looping over the csv-files. Keep in mind that the files are very large which might cause problems with your memory. Therefore, it is sensible to filter only the data you need in the loop.

# input directory

input_dir = drive + r"Für mich freigegeben\Mannheimer Webpanel (MWP)\\" + wave

os.chdir(input_dir)

# get all files in input directory

input_files = sorted(glob.glob('ARGUS_chunk_*.csv'))

# structure for exemplary code

tick = 0

output = pd.DataFrame(columns=['number', 'percentage_errors'])

error_count = []

# loop over chunks

for input_file in input_files:

#########################################

# Exemplary calculations based on the MWP

#########################################

# Search for the following search term

search_term = "digital"

tick += 1

if tick > 2:

break

# load chunk in chunks due to file size

for data in pd.read_csv(input_file, chunksize=20000, sep = "\t", encoding='utf-8', error_bad_lines=False):

data = data.loc[data['text'].notnull(),:]

temp = set(data.loc[data['text'].str.contains(search_term), 'ID'].values)

temp.update(temp)

print(temp)

{2011052555, 2011057167, 2011061267, 2011027478, 2011052569, 2011047456, 2011063331, 2011025957, 2011042854, 2011026988, 2011041328, 2011058738, 2011066931, 2011044404, 2011059767, 2011046456, 2011065399, 2011024957, 2011022407, 2011027528, 2011029065, 2011024458, 2011032141, 2011044944, 2011052113, 2011074132, 2011028054, 2011053145, 2011036769, 2011060324, 2011037285, 2011048551, 2011031664, 2011025524, 2011065460, 2011054717, 2011032191, 2011062922, 2011040399, 2011026066, 2011024534, 2011051159, 2011027609, 2011041434, 2011049641, 2011017900, 2011016884, 2011053238, 2011072700, 2011031742, 2011043006, 2011062467, 2011032260, 2011015882, 2011055309, 2011029199, 2011063504, 2011047633, 2011024084, 2011023572, 2011066072, 2011012323, 2011046115, 2011063526, 2011028715, 2011053291, 2011051253, 2011058950, 2011063047, 2011023116, 2011045137, 2011051289, 2011024668, 2011025693, 2011049244, 2011033888, 2011044129, 2011043618, 2011043106, 2011050273, 2011036453, 2011052846, 2011052849, 2011044673, 2011049798, 2011050827, 2011062107, 2011040097, 2011034981, 2011021157, 2011044710, 2011029352, 2011030889, 2011041646, 2011039087, 2011039599, 2011030898, 2011041141, 2011044213, 2010999159, 2011037566, 2011051910, 2011051399, 2011039626, 2011048332, 2011036047, 2011030934, 2011038616, 2011039129, 2011027866, 2011042723, 2011062695, 2011037611, 2011026860, 2011040173, 2011032494, 2011049389, 2011025844, 2011062204, 2011025346, 2011032003, 2011054536, 2011042258, 2011059155, 2011048405, 2011040219, 2011027945, 2011037677, 2011041782, 2011025916, 2011055615}

The above output shows company IDs on whose corporate website the search term digital matched. This shall just give a very high level example on how to work with the MWP.

Can the MWP be combined with other data?

Please note that it is possible to combine the MWP with further firm-level data hosted at ZEW, such as for example the Mannheim Innovation Panel (MIP) and the IAB/ZEW Start-up Panel. This is how the MWP realizes its full potential. Find out more on the website of the ZEW-FDZ.

The Mannheim Web Panel was developed as part of the project BERD@BW funded by the Ministry of Science, Research and the Arts of Baden-Württemberg from 2019 to 2022.